4th-year PhD Student @ AMLab, University of Amsterdam

About Me

I am a final year PhD student in the Amsterdam Machine Learning Lab (opens new window) supervised by Eric Nalisnick (opens new window) (Johns Hopkins University). I also closely collaborate with Emtiyaz Khan (opens new window) (RIKEN AIP) and the Approximate Bayesian Inference Team (opens new window). I am interested in building safe, interpretable and robust AI systems. My work is rooted in Bayesian principles, uncertainty quantification, local sensitivity measures and human-AI interplay.

Short Bio

PhD candidate at Amsterdam Machine Learning Lab (opens new window), University of Amsterdam, Netherlands

Sept 2021 - presentResearch intern at Qualcomm AI Research (opens new window), Netherlands

July - Nov 2024Research assistant at Approximate Bayesian Inference Team (opens new window), RIKEN Centre for Advanced Intelligence Project (opens new window), Tokyo, Japan

May 2019 - July 2021, Feb 2024 - July 2024 (student trainee)Young Graduate Trainee at Advanced Concepts Team (opens new window), European Space Agency (opens new window), Netherlands

Sept 2017 - Dec 2018MSc in Artificial Intelligence (ML & CompNeuro track) at University of Edinburgh, United Kingdom

2016 - 2017BEng in Mathematics and Computer Science at Imperial College London, United Kingdom

2013 - 2016

Recent News

- [04/2025] Posters presentations at ICLR 2025 (opens new window) and AABI 2025 (opens new window) in Singapore

- [03/2025] Accepted paper at AABI 2025 (opens new window) on Revisiting Influence Functions for Latent Variable Models using Variational Bayes

- [01/2025] Accepted paper at ICLR 2025 (opens new window) on Approximating Full Conformal Prediction for Neural Network Regression with Gauss-Newton Influence

- [07/2024] Joined Qualcomm AI Research as a summer intern in the Distributed Learning & AI Safety Team working with Christos Louizos (opens new window) and Alvaro Correia (opens new window)

- [06/2024] Participated in the 2nd Bayes-Duality Workshop (opens new window) in Japan between June 12-28 (talk + part of organizing team)

- [05/2024] Received Student Paper Highlight (opens new window) award for oral paper at AISTATS 2024 (opens new window)

- [03/2024] Poster presentation at Deep Learning: Theory, Applications, and Implications workshop (opens new window) in Tokyo

- [02/2024] (Re-)joined RIKEN AIP in Tokyo as a student trainee (opens new window) working with Emtiyaz Khan (opens new window) (until July 2024)

- [01/2024] Accepted paper (oral) at AISTATS 2024 (opens new window) on Learning to Defer to a Population: A Meta-Learning Approach

- [12/2023] Poster presentation at NeurIPS 2023 (opens new window) in New Orleans

Papers

Approximating Full Conformal Prediction for Neural Network Regression with Gauss-Newton Influence

13th International Conference on Learning Representations (ICLR), 2025

paper (opens new window) / code (opens new window) / poster / slides

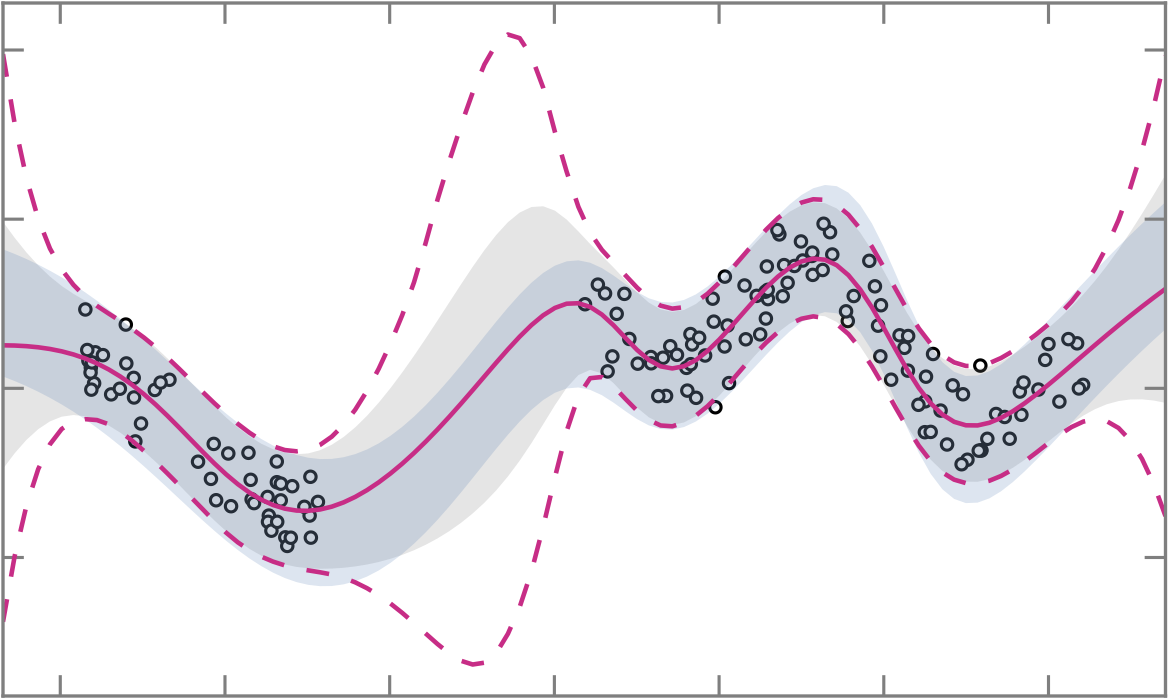

In this work, we construct prediction intervals for neural network regressors post-hoc without held-out data. This is achieved by approximating the full conformal prediction method (full-CP). Whilst full-CP nominally requires retraining the model for every test point and candidate label, we propose to train just once and locally perturb model parameters using Gauss-Newton influence to approximate the effect of retraining. On standard regression benchmarks and bounding box localization, we show the resulting prediction intervals are locally-adaptive and often tighter than those of split-CP.

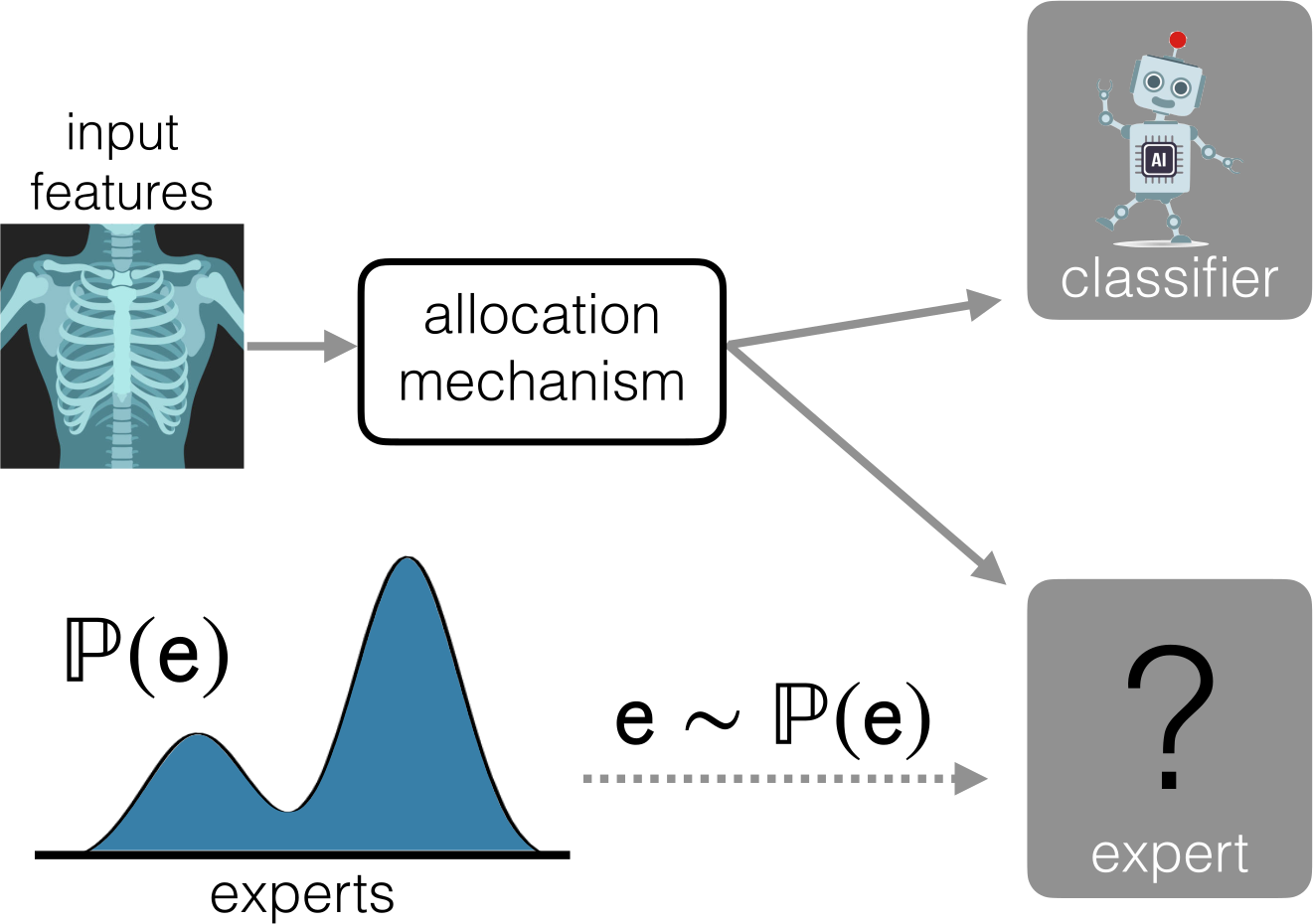

Learning to Defer to a Population: A Meta-Learning Approach

27th International Conference on Artificial Intelligence and Statistics (AISTATS), 2024

Oral presentation & Outstanding Student Paper award (top-1% of accepted papers)

paper (opens new window) / arXiv (opens new window) / code (opens new window) / poster / slides

We formulate a learning to defer (L2D) system that can cope with never-before-seen experts at test-time. We accomplish this by using meta-learning, considering both optimization- and model-based variants. Given a small context set to characterize the currently available expert, our framework can quickly adapt its deferral policy. For the model-based approach, we employ an attention mechanism that is able to look for points in the context set that are similar to a given test point, leading to an even more precise assessment of the expert’s abilities.

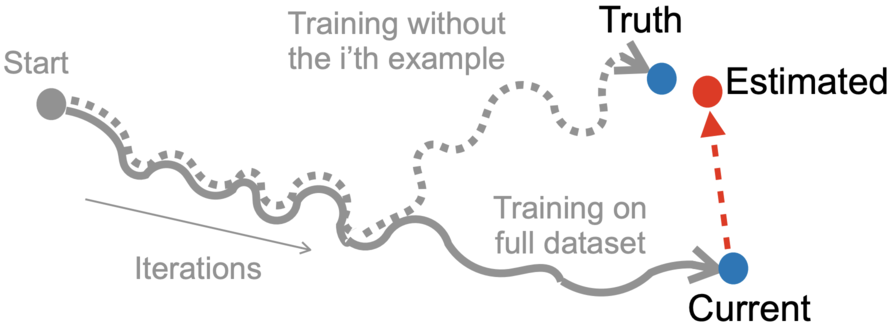

The Memory-Perturbation Equation: Understanding Model's Sensitivity to Data

37th Conference on Neural Information Processing Systems (NeurIPS), 2023

ICML 2023 Workshop on Principles of Duality for Modern Machine Learning

paper (opens new window) / arXiv (opens new window) / code (opens new window) / poster (opens new window)

We present the Memory-Perturbation Equation (MPE) which relates model’s sensitivity to perturbation in its training data. Derived using Bayesian principles, the MPE unifies existing sensitivity measures, generalizes them to a wide-variety of models and algorithms, and unravels useful properties regarding sensitivities. Our empirical results show that sensitivity estimates obtained during training can be used to faithfully predict generalization on unseen test data.

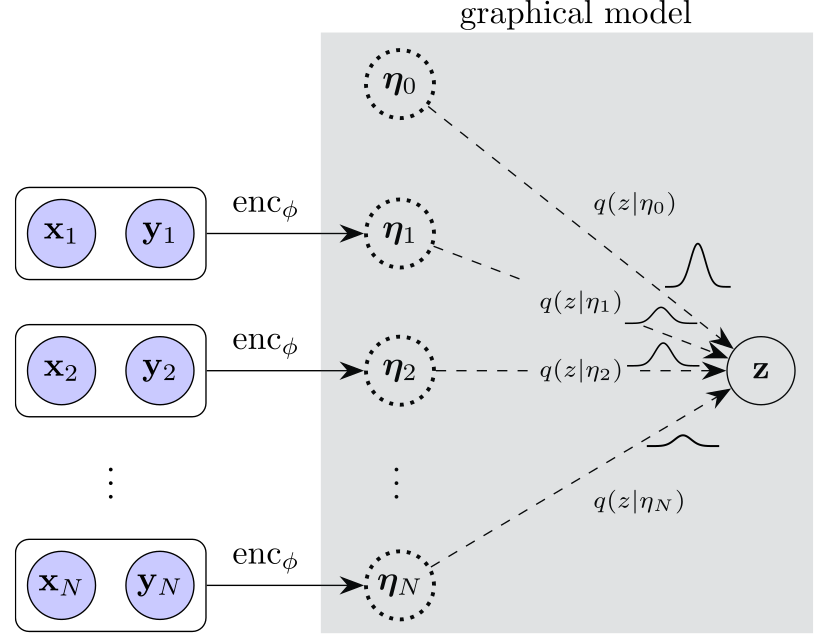

Exploiting Inferential Structure in Neural Processes

39th Conference on Uncertainty in Artificial Intelligence (UAI), 2023

5th Workshop on Tractable Probabilistic Modeling at UAI 2022

paper (opens new window) / arXiv (opens new window) / poster (opens new window)

This work provides a framework that allows the latent variable of Neural Processes to be given a rich prior defined by a graphical model. These distributional assumptions directly translate into an appropriate aggregation strategy for the context set. We describe a message-passing procedure that still allows for end-to-end optimization with stochastic gradients. We demonstrate the generality of our framework by using mixture and Student-t assumptions that yield improvements in function modelling and test-time robustness.

Talks

5. How to Build Transparent and Trustworthy AI

2nd Bayes-Duality Workshop (opens new window) (Japan), 06/2024

Jointly with Emtiyaz Khan (opens new window) (main speaker)

video (opens new window) /

4. Learning to Defer to a Population: A Meta-Learning Approach (Oral presentation)

27th International Conference on Artificial Intelligence and Statistics (opens new window) (Spain), 05/2024

slides /

3. Memory Maps to Understand Models

Dutch Society of Pattern Recognition and Image Processing: Fall Meeting on Anomaly Detection (opens new window) (Amsterdam, Netherlands), 11/2023 (Oral presentation)

1st Bayes-Duality Workshop (opens new window) (Japan), 06/2023

ESA Advanced Concepts Team Science Coffee (opens new window) (Netherlands), 04/2023

slides /

2. Adaptive and Robust Learning with Bayes

NeurIPS Bayesian Deep Learning workshop (opens new window) (virtual), 12/2021

Jointly with Emtiyaz Khan (opens new window) (main speaker) & Siddharth Swaroop (opens new window)

video (opens new window) / slides (opens new window)

1. Identifying Memorable Experiences of Learning Machines

RIKEN AIP Open Seminar (opens new window) (virtual), 03/2021

video (opens new window) / slides